تدوين أوه الكبيرة

في الرياضيات وعلم الحاسوب، والمجالات ذات الصلة ، تدوين أوه الكبيرة big O notation (المعروف أيضا باسم big O notation، تدوين لانداو Landau notation، تدوين باخمان-لانداو Bachmann–Landau notation، والتدوين المقارب) يصف السلوك الحدي لتابع عندما يسعى الوسيط نحو قيمة معينة أو اللانهاية عادة باستخدام توابع أبسط. يميز تدوين أوه الكبيرة التوابع وفقا لمعدلات النمو لديها: فقد تمثل التوابع المختلفة وذات معدل النمو الواحد باستخدام تدوين أوه نفسه.

على الرغم من أنها طوّرت كجزء من الرياضيات البحتة ، فإن هذا التدوين كثيرا ما يستخدم الآن في تحليل الخوارزميات لوصف استهلاك خوارزمية ما للموارد التحسيبية: غالباً ما يعبر عن وقت التشغيل واستخدام الذاكرة في الحال الأسوأ أو الحال المتوسط من قبل خوارزمية ما بوصفهما تابعين للدخل بإستخدام تدوين أوه الكبيرة. هذا يسمح لمصممي خوارزمية التنبؤ بسلوك الخوارزميات، وتحديد الخوارزمية المناسبة للاستخدام من بين عدة خوارزميات، وبطريقة مستقلة عن بنيان الحاسوب أو معدل الساعة. وبما أنه في تدون أوه الكبيرة تهمل ثوابت مضروبة بوقت التشغيل، وتُتجاهل كفاءة الخوارزمية لأحجام منخفضة من المدخلات، فإن لا يكشف دائما أسرع خوارزمية بالنسبة للممارسة العملية أو بالنسبة لمجموعات من البيانات ذات أحجام عملية. لكن هذا النهج يظل فعالاً للغاية للتوصل إلى التدرج بين الخوارزميات المختلفة عندما تصبح أحجام المدخلات كبيرة.

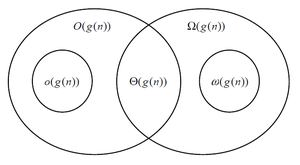

لا يعطي تدوين أوه الكبيرة لتابع موصف من خلاله تقدم سوى الحد الأعلى لمعدل نمو ذلك التابع. كما ترتبط بتدوين أوه الكبيرة تدوينات عدة، وذلك باستخدام الرموز o ، Ω ، ω ، وΘ ، لوصف أنواع أخرى من حدود معدلات النمو المقاربة. كما يستخدم تدوين أوه الكبيرة العديد من المجالات الأخرى لتقديم تقديرات مماثلة.

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

التعريف الشكلي

ليكن لدينا التابعان f(x) و g(x) المعرفان على مجال فرعي ما من مجموعة الأعداد الحقيقة. نكتب:

إذا وفقط إذا، ومن أجل قيم كبيرة بما فيه الكفاية لـ x،كانت قيمة f(x) على الأكثر هي قيمة g(x) مضروبة بثابت ما.

هذا يعني، يكون f(x) = O(g(x)) إذا وفقط إذا وجد عدد حقيقي M وعدد حقيقي x0 بحيث::

In many contexts, the assumption that we are interested in the growth rate as the variable x goes to infinity is left unstated, and one writes more simply that f(x) = O(g(x)).

The notation can also be used to describe the behavior of f near some real number a (often, a = 0): we say

if and only if there exist positive numbers δ and M such that

If g(x) is non-zero for values of x sufficiently close to a, both of these definitions can be unified using the limit superior:

if and only if

Related asymptotic notations

Big O is the most commonly used asymptotic notation for comparing functions, although in many cases Big O may be replaced with Big Theta Θ for asymptotically tighter bounds. Here, we define some related notations in terms of Big O, progressing up to the family of Bachmann–Landau notations to which Big O notation belongs.

Little-o notation

The relation is read as " is little-o of ". Intuitively, it means that grows much faster than . It assumes that f and g are both functions of one variable. Formally, it states

Or alternatively:

Let f(x) and g(x) be two functions defined on some subset of the real numbers. One writes

if and only if, for each positive constant M, f(x) is at most M multiplied by g(x) in absolute value, for each x, which is large enough. That is, f(x) = o(g(x)) if and only if, for every , there exists a constant x0, such that

Note the difference between the earlier formal definition for the Big-O notation, and the present definition of little-o: while the former has to be true for at least one constant M the latter must hold for every positive constant[1].

For example,

Little-o notation is common in mathematics but rarer in computer science. In computer science the variable (and function value) is most often a natural number. In mathematics, the variable and function values are often real numbers. The following properties can be useful:

- (and thus the above properties apply with most combinations of o and O).

As with big O notation, the statement " is " is usually written as , which is a slight abuse of notation.

Family of Bachmann–Landau notations

| Notation | Name | Intuition | As , eventually... | Definition |

|---|---|---|---|---|

| Big Omicron; Big O; Big Oh | is bounded above by (up to constant factor) asymptotically | for some k | or | |

| (Note that papers in number theory have been increasingly and widely using this same notation, since the beginning of the 20th century, in the weaker sense that f = o(g) is false) | Big Omega | is bounded below by (up to constant factor) asymptotically | for some k | |

| Big Theta | is bounded both above and below by asymptotically | for some k1, k2 |

| |

| Small Omicron; Small O; Small Oh | is dominated by asymptotically | for every | ||

| Small Omega | dominates asymptotically | for every k | ||

| on the order of; "twiddles" | is equal to asymptotically |

Bachmann–Landau notation was designed around several mnemonics, as shown in the As , eventually... column above and in the bullets below. To conceptually access these mnemonics, "omicron" can be read "o-micron" and "omega" can be read "o-mega". Also, the lower-case versus capitalization of the Greek letters in Bachmann–Landau notation is mnemonic.

- The o-micron mnemonic: The o-micron reading of and of can be thought of as "O-smaller than" and "o-smaller than", respectively. This micro/smaller mnemonic refers to: for sufficiently large input parameter(s), grows at a rate that may henceforth be less than regarding or .

- The o-mega mnemonic: The o-mega reading of and of can be thought of as "O-larger than". This mega/larger mnemonic refers to: for sufficiently large input parameter(s), grows at a rate that may henceforth be greater than regarding or .

- The upper-case mnemonic: This mnemonic reminds us when to use the upper-case Greek letters in and : for sufficiently large input parameter(s), grows at a rate that may henceforth be equal to regarding .

- The lower-case mnemonic: This mnemonic reminds us when to use the lower-case Greek letters in and : for sufficiently large input parameter(s), grows at a rate that is henceforth inequal to regarding .

Aside from Big O notation, the Big Theta Θ and Big Omega Ω notations are the two most often used in computer science; the Small Omega ω notation is rarely used in computer science.

Informally, especially in computer science, the Big O notation often is permitted to be somewhat abused to describe an asymptotic tight bound where using Big Theta Θ notation might be more factually appropriate in a given context. For example, when considering a function , all of the following are generally acceptable, but tightnesses of bound (i.e., bullets 2 and 3 below) are usually strongly preferred over laxness of bound (i.e., bullet 1 below).

- T(n) = O(n100), which is identical to T(n) ∈ O(n100)

- T(n) = O(n3), which is identical to T(n) ∈ O(n3)

- T(n) = Θ(n3), which is identical to T(n) ∈ Θ(n3).

The equivalent English statements are respectively:

- T(n) grows asymptotically no faster than n100

- T(n) grows asymptotically no faster than n3

- T(n) grows asymptotically as fast as n3.

So while all three statements are true, progressively more information is contained in each. In some fields, however, the Big O notation (bullets number 2 in the lists above) would be used more commonly than the Big Theta notation (bullets number 3 in the lists above) because functions that grow more slowly are more desirable. For example, if represents the running time of a newly developed algorithm for input size , the inventors and users of the algorithm might be more inclined to put an upper asymptotic bound on how long it will take to run without making an explicit statement about the lower asymptotic bound.

Extensions to the Bachmann–Landau notations

Another notation sometimes used in computer science is Õ (read soft-O): f(n) = Õ(g(n)) is shorthand for f(n) = O(g(n) logk g(n)) for some k. Essentially, it is Big O notation, ignoring logarithmic factors because the growth-rate effects of some other super-logarithmic function indicate a growth-rate explosion for large-sized input parameters that is more important to predicting bad run-time performance than the finer-point effects contributed by the logarithmic-growth factor(s). This notation is often used to obviate the "nitpicking" within growth-rates that are stated as too tightly bounded for the matters at hand (since logk n is always o(nε) for any constant k and any ε > 0).

The L notation, defined as

is convenient for functions that are between polynomial and exponential.

انظر أيضاً

- Asymptotic expansion: Approximation of functions generalizing Taylor's formula

- Asymptotically optimal: A phrase frequently used to describe an algorithm that has an upper bound asymptotically within a constant of a lower bound for the problem

- Limit superior and limit inferior: An explanation of some of the limit notation used in this article

- Nachbin's theorem: A precise method of bounding complex analytic functions so that the domain of convergence of integral transforms can be stated

- Big O in probability notation : Op,op

قراءة متقدمة

- Paul Bachmann. Die Analytische Zahlentheorie. Zahlentheorie. pt. 2 Leipzig: B. G. Teubner, 1894.

- Edmund Landau. Handbuch der Lehre von der Verteilung der Primzahlen. 2 vols. Leipzig: B. G. Teubner, 1909.

- G. H. Hardy. Orders of Infinity: The 'Infinitärcalcül' of Paul du Bois-Reymond, 1910.

- Marian Slodicka (Slodde vo de maten) & Sandy Van Wontergem. Mathematical Analysis I. University of Ghent, 2004.

- Donald Knuth. The Art of Computer Programming, Volume 1: Fundamental Algorithms, Third Edition. Addison-Wesley, 1997. ISBN 0-201-89683-4. Section 1.2.11: Asymptotic Representations, pp. 107-123.

- Thomas H. Cormen, Charles E. Leiserson, Ronald L. Rivest, and Clifford Stein. Introduction to Algorithms, Second Edition. MIT Press and McGraw-Hill, 2001. ISBN 0-262-03293-7. Section 3.1: Asymptotic notation, pp. 41–50.

- Michael Sipser (1997). Introduction to the Theory of Computation. PWS Publishing. ISBN 0-534-94728-X. Pages 226–228 of section 7.1: Measuring complexity.

- Jeremy Avigad, Kevin Donnelly. Formalizing O notation in Isabelle/HOL

- Paul E. Black, "big-O notation", in Dictionary of Algorithms and Data Structures [online], Paul E. Black, ed., U.S. National Institute of Standards and Technology. 11 March 2005. Retrieved December 16, 2006.

- Paul E. Black, "little-o notation", in Dictionary of Algorithms and Data Structures [online], Paul E. Black, ed., U.S. National Institute of Standards and Technology. 17 December 2004. Retrieved December 16, 2006.

- Paul E. Black, "Ω", in Dictionary of Algorithms and Data Structures [online], Paul E. Black, ed., U.S. National Institute of Standards and Technology. 17 December 2004. Retrieved December 16, 2006.

- Paul E. Black, "ω", in Dictionary of Algorithms and Data Structures [online], Paul E. Black, ed., U.S. National Institute of Standards and Technology. 29 November 2004. Retrieved December 16, 2006.

- Paul E. Black, "Θ", in Dictionary of Algorithms and Data Structures [online], Paul E. Black, ed., U.S. National Institute of Standards and Technology. 17 December 2004. Retrieved December 16, 2006.

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

روابط خارجية

- ^ Thomas H. Cormen et al., 2001, Introduction to Algorithms, Second Edition